Human-Centric AI Ethics

One of the essential objectives is to get to the point where the ValuesCredentials

Human-centric AI implies a relationship with what might be considered a very different kind of 'digital identity' construct, one that can only really be operated via an agent.

The notes about sense are more specifically about an underlying system that is required to support the proper use of vocabulary, which in turn supports other systems that depend on the ability to use sense (or something like it). Even so, they have meaningful implications for the use of vocabulary and language that link to the topics discussed in these notes.

Introductory Notes

It has been a continual source of annoyance that ‘AI ethics’ folk are increasingly involved, drawn by the significant ‘value’ associated with contracts in the area, yet seemingly lack a variety of prerequisites.

Whilst multinationals and large institutions go about forming (seemingly expensive) ‘AI ethics’ bodies that speak and write policies about AI implementations, their considerations of ethics more often relate to systems that are designed to be owned by large corporations, not by humans.

Whatever the cause, little has been done to ensure people can own their own AI agents. Without that infrastructure, it is not easy to ensure that human beings have support via electronic, digital, web or ‘AI’ systems, even simply to seek access to justice in circumstances that may well leave innocent people injured.

People are subjected to organised violence that ends their lives or severely harms their quality of life, whether in effect or materially, as a consequence of wrongs that, in my opinion, any good ‘AI ethics’ system should have a reasonable capacity to understand better and sooner.

The problem is that large entities do not willingly make systems that expose them to legal repercussions. Consequently, this ‘mainframe’-like, money-first policy framework engenders a form of moral poverty that is, to me, simply detestable.

I hear from staff members in politicians’ offices who suggest that nothing I’ve done has impacted ‘government policy’, whilst the fools are logging into systems that are being developed to depend, in part, upon infrastructure that I was involved in creating, globally. Yet, in my opinion, this isn’t about honesty; it’s about violence. These are systems that act violently in ways that in turn provoke violence, because their underlying assumptions are built upon the acceptability of barriers engendered to limit lawful recourse when persons are disaffected by PublicSectorWrongDoings. Whilst an extraordinary amount of effort may well result in a person having a problem that has been disaffecting them addressed, the apparent belief is often that any such adjustment should in and of itself be considered lawful remedy. This holds regardless of the broader repercussions such wrongs may have had upon the target of wrongdoing, and regardless of the intentional employment of SocialAttackVectors made possible because AI is designed to support some SafetyProtocols without any meaningful support for others, including some of those already defined in these documents.

Notably, during the course of my R&D work in relation to these ecosystems, it has become apparent to me that they are less likely to engage in wrongdoing when dealing with professional criminals. It has been suggested to me that they prefer to target law-abiding citizens because doing so is far easier than targeting criminals, who have advanced their skills as a requirement of their profession. In effect, criminals are too hard to sort out. So they go after the good actors, whom they know they can assault without consequence because, being good actors, they are unwilling to break the law, regardless of how these people assault our values as a society overall.

So.

Defining 'Human-Centric AI Ethics'

Defining ‘Human-Centric AI Ethics’ relates to WebScience considerations when modelling ArtificialMinds to support ‘reality check tech’. Human-centric AI is for those who want to build solutions that improve our capacity to sort out bad actors via the courts, by producing solutions that provide ‘democratisation’ of electronic evidence. This in turn supports the foundational requirements considered necessary to do real-world ‘AI ethics’ in ways that were previously impossible as a consequence of existing designs.

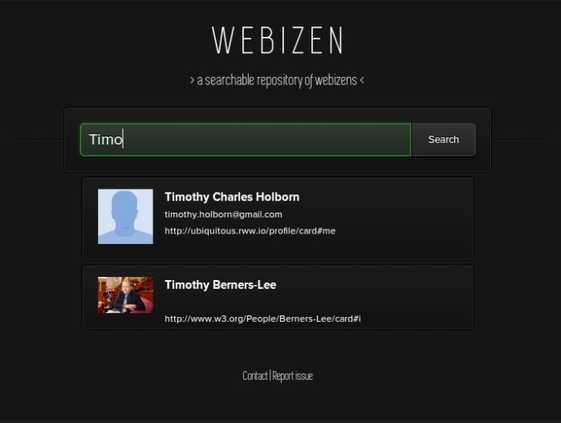

The founder of Web Civics is actively working on a HumanCentricAI ecosystem implementation called Webizen.

Webizen systems differ from modern global cloud 'mainframes', which are built to support the requirements of rulers rather than rights (human rights, in other words). Such systems have nothing to do with ‘ethics’ in a world that is said to be about building peace infrastructure. He continues to progress this long-term project, which has played such a big role in defining his life. The underlying purpose of the work is all about the sorts of things described in the instruments referred to in the ValuesCredentials documentation. The now-global credentials work is often called 'digital identity', and it is a derivative of work he helped to establish. Sadly, those who were promoted to leadership roles never quite got to the point where many of the use cases related to SafetyProtocols, including but not limited to ValuesCredentials, became part of what was ultimately implemented globally as ID.

For consideration, the video below provides a very quick summary of the difference between rights and rulers.

Economic Benefits of Ethics for Moral Economy Systems

Systems that are designed to support acts of violence with legal impunity, whether through the structure of their designs or through other political-science-related manoeuvres, do not cost less. Rather, there is a propensity to engender or employ various types of SocialAttackVectors in an effort to negate responsibility for whatever the underlying problem actually is. Terms that describe these sorts of behaviours include "passing the buck" and obstruction. Innately, these behaviours incur additional costs whilst failing to deliver the outcome that needs to be resolved in some way, even if the outcome that is engendered is that the victim ends up dead. When these sorts of things are inflicted upon people, they have a ripple effect across many others. Overall, they do not improve productivity, support human rights or increase the capacity for gainful revenues to be developed through the success of policies relating to SafetyProtocols, ValuesCredentials, WebScience or various other measures.

What is Ethics?

The diagram below, produced in 2018, illustrates the distinction between 'moral responsibility' and 'ethical responsibility'.

![[2018-9TheCollectiveInfoSphere.webp]]

![[1_iGzdEyUWAzT7TDjU6IUvTQ.webp]]

Therein, the distinction is that every individual human being owns the moral responsibility associated with his or her actions. Yet in a group situation, exercising that moral responsibility to make personal choices in any given situation may not produce an outcome consistent with the individual's view. As such, the prevailing outcome is considered to represent the group's ethical responsibilities.

Because there is very little support for individuals (or indeed for lawful remedy in relation to wrongdoings), there are various distortive effects upon 'ethics' generally. These in turn affect the ethics relating to the creation of the active software agents that we call Artificial Intelligence, or AI.

HumanCentricAI Differences

Through the use or application of 'AI Ethics' in relation to HumanCentricAI ecosystems, ‘ethics’ or the various values statements said to be the guiding instruments bound to people's terms of employment are in turn made subject to semantic ecosystems. These ecosystems ensure that those people are not given the right to assault the human rights of children, or of their loved ones, in order to exploit children.

An employment contract may otherwise seek to provide impunity without insurable (or uninsurable) risk or liability. Under HumanCentricAI, actions that may be considered part of a person's authorised behaviours under such a contract become contextualised by the ability of others to seek lawful consideration through the courts.

So the benefit of HumanCentricAI applies to people who actually care about ‘ethics’ or moral equities, socio-economic systems, solutions, and the means to use technology as a series of tools to support the interests of our human family. By democratising ‘AI servers’, there is a means to include consideration of natural justice, and of the needs, rights, values and considerations of importance to natural persons in their private capacity, regardless of any other role they may hold in our societies. There is now a technical means to figure out how we can make these considerations more meaningful than before.

As such, new opportunities become available to form more appropriate approaches that can better support the common understanding of what terms like 'ethics' should mean, particularly in relation to public policy and related (endorsed) public / private sector activities.

Do You Want to Get Involved?

We're just starting to invest more in this initiative. If you're interested in getting involved, please check out the WebCivicsCharter, which will in turn provide links to the community sites.